GLSL Optimizer

Today I integrated GLSL Optimizer into my shader code…

It had some quirks:

- It doesn't accept #line directives that include file names, so I have to strip those before feeding it the source.
- It doesn't accept GLSL 3.30 (OpenGL 3.3) shaders.
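Stripping the file names doesn't have to mean losing the line numbers. Below is a minimal sketch, assuming the directives look like `#line 12 "common.glsl"` (C-preprocessor style; plain GLSL only allows a line number plus an optional integer source-string number). It removes the quoted name and keeps `#line 12`, so the line mapping survives. `strip_line_filenames` is a hypothetical helper, not part of GLSL Optimizer.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: rewrites `#line 12 "common.glsl"` to `#line 12`
 * so GLSL Optimizer accepts it, keeping the number itself so the line
 * mapping is not lost. Other lines are copied unchanged.
 * Returns a malloc'd string (caller frees) or NULL on allocation failure. */
char *strip_line_filenames(const char *src)
{
    char *out = malloc(strlen(src) + 1); /* output never grows */
    if (out == NULL)
        return NULL;

    const char *p = src;
    char *o = out;
    while (*p != '\0') {
        const char *eol = strchr(p, '\n');
        size_t line_len = eol ? (size_t)(eol - p) : strlen(p);
        size_t keep = line_len;

        /* Skip indentation to see whether this line is a #line directive. */
        const char *q = p;
        while (q < p + line_len && (*q == ' ' || *q == '\t'))
            q++;
        size_t rest = (size_t)(p + line_len - q);

        if (rest >= 5 && strncmp(q, "#line", 5) == 0) {
            /* Drop everything from the opening quote of the file name on,
             * plus the whitespace that preceded it. */
            const char *quote = memchr(q, '"', rest);
            if (quote != NULL) {
                keep = (size_t)(quote - p);
                while (keep > 0 && (p[keep - 1] == ' ' || p[keep - 1] == '\t'))
                    keep--;
            }
        }

        memcpy(o, p, keep);
        o += keep;
        p += line_len;
        if (*p == '\n')
            *o++ = *p++; /* keep the newline so line counts don't shift */
    }
    *o = '\0';
    return out;
}
```

The returned string is what gets handed to the optimizer; the caller owns it and must `free()` it.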

The second quirk isn't that bad, although I had been using 3.30 shaders (the tutorials I was following used them as well).

The problem was that I had some difficulty understanding how the vertex shader's inputs get bound to the vertex buffer.

Previously I had something like this in the shader:

```glsl
layout(location = 0) in vec3 vPos;
layout(location = 1) in vec4 vColor;
layout(location = 2) in vec2 vTex0;
```

And this in the code:

```c
glBindBuffer(GL_ARRAY_BUFFER, vertexbuffer);

glVertexAttribPointer(
    0,                         // attribute 0.
    3,                         // size
    GL_FLOAT,                  // type
    GL_FALSE,                  // normalized?
    sizeof(float)*9,           // stride
    (void*)0                   // array buffer offset
);
glVertexAttribPointer(
    1,                         // attribute 1.
    4,                         // size
    GL_FLOAT,                  // type
    GL_FALSE,                  // normalized?
    sizeof(float)*9,           // stride
    (void*)(3*sizeof(float))   // array buffer offset
);
glVertexAttribPointer(
    2,                         // attribute 2.
    2,                         // size
    GL_FLOAT,                  // type
    GL_FALSE,                  // normalized?
    sizeof(float)*9,           // stride
    (void*)(7*sizeof(float))   // array buffer offset
);
```

So the shader declaration itself made it explicit which part of the VBO would be fed to which variable…
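The stride and offsets in those calls are just the layout of one interleaved vertex: 3 floats of position, 4 of color and 2 of texture coordinates, 9 floats in total. A minimal sketch, assuming a hypothetical `struct Vertex` that mirrors that layout, lets the compiler derive the numbers with `sizeof` and `offsetof` instead of hard-coding them:

```c
#include <stddef.h>

/* Hypothetical vertex type mirroring the interleaved VBO layout above. */
struct Vertex {
    float pos[3];   /* attribute 0: vPos   */
    float color[4]; /* attribute 1: vColor */
    float tex0[2];  /* attribute 2: vTex0  */
};

/* Fail the build if the compiler ever pads the struct, because the
 * buffer is assumed to hold 9 tightly packed floats per vertex. */
_Static_assert(sizeof(struct Vertex) == 9 * sizeof(float),
               "struct Vertex must be 9 packed floats");

/* Stride = size of one whole vertex; offsets = where each field starts. */
enum {
    VERTEX_STRIDE = sizeof(struct Vertex),
    POS_OFFSET    = offsetof(struct Vertex, pos),
    COLOR_OFFSET  = offsetof(struct Vertex, color),
    TEX0_OFFSET   = offsetof(struct Vertex, tex0)
};
```

The calls would then pass `VERTEX_STRIDE` as the stride and, for attribute 1, `(void*)(size_t)COLOR_OFFSET` as the offset. `_Static_assert` needs a C11 compiler.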

With 1.50 shaders, I now have:

```glsl
attribute vec3 vPos;
attribute vec4 vColor;
attribute vec2 vTex0;
```

Which means I would have to do something like:

```c
glBindBuffer(GL_ARRAY_BUFFER, vertexbuffer);

glVertexAttribPointer(
    glGetAttribLocation(program_id, "vPos"),
    3,                         // size
    GL_FLOAT,                  // type
    GL_FALSE,                  // normalized?
    sizeof(float)*9,           // stride
    (void*)0                   // array buffer offset
);
glVertexAttribPointer(
    glGetAttribLocation(program_id, "vColor"),
    4,                         // size
    GL_FLOAT,                  // type
    GL_FALSE,                  // normalized?
    sizeof(float)*9,           // stride
    (void*)(3*sizeof(float))   // array buffer offset
);
glVertexAttribPointer(
    glGetAttribLocation(program_id, "vTex0"),
    2,                         // size
    GL_FLOAT,                  // type
    GL_FALSE,                  // normalized?
    sizeof(float)*9,           // stride
    (void*)(7*sizeof(float))   // array buffer offset
);
```

But this seems awfully slow (there's a string lookup on every call), or else it means I have to make the vertex declaration part of the material instead of part of the mesh (as it is currently).

But when I ran the program without those lookups, still using the fixed indices 0, 1 and 2, it worked fine… So the system must be binding the attributes to indices somehow…

After mucking around, I found out that the system seems to assign the indices in declaration order.

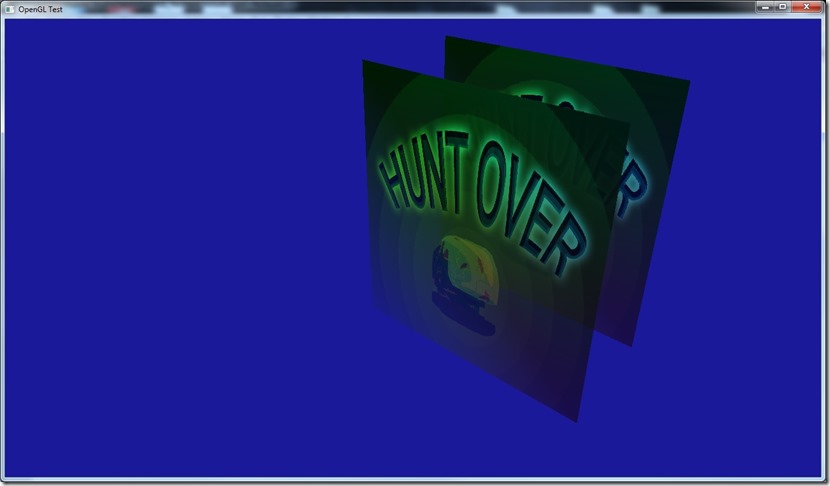

So, if I declare it like above, I get:

But if I instead declare:

```glsl
attribute vec3 vPos;
attribute vec2 vTex0;
attribute vec4 vColor;
```

I get:

Which is what I'd expect if the texture coordinates and the color were swapped…

So the order matters (which is what I assume in the rest of the engine anyway, so no problems there)…

I'm still worried that other devices/video cards might assign the indices differently, which would break my system, but I'll implement it like this for now and wait for testing to see if I need to change things…
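One way to stop depending on the driver's default assignment is to bind the indices explicitly with `glBindAttribLocation` before linking, which works with `attribute` declarations. Below is a minimal sketch of the part that doesn't need a GL context. It assumes one variable per `attribute` declaration and no declarations hidden inside comments, and it collects the attribute names in the order they appear in the shader source. `collect_attribute_names` and `MAX_ATTRIB_NAME` are hypothetical names.

```c
#include <ctype.h>
#include <string.h>

#define MAX_ATTRIB_NAME 64

/* Copies the names of `attribute` declarations into names[], in source
 * order. Assumes "attribute <type> <name>;" with a single variable and
 * no attribute declarations inside comments. Returns how many were found
 * (at most max). */
int collect_attribute_names(const char *src, char names[][MAX_ATTRIB_NAME], int max)
{
    int count = 0;
    const char *p = src;
    while (count < max && (p = strstr(p, "attribute")) != NULL) {
        const char *after = p + strlen("attribute");
        /* Whole-word match only, so e.g. "my_attribute" is skipped. */
        int starts_word = (p == src) || !(isalnum((unsigned char)p[-1]) || p[-1] == '_');
        int ends_word = isspace((unsigned char)*after);
        const char *semi = strchr(after, ';');
        if (!starts_word || !ends_word || semi == NULL) {
            p = after;
            continue;
        }
        /* The variable name is the last identifier before the semicolon. */
        const char *end = semi;
        while (end > after && isspace((unsigned char)end[-1]))
            end--;
        const char *start = end;
        while (start > after && (isalnum((unsigned char)start[-1]) || start[-1] == '_'))
            start--;
        size_t len = (size_t)(end - start);
        if (len > 0 && len < MAX_ATTRIB_NAME) {
            memcpy(names[count], start, len);
            names[count][len] = '\0';
            count++;
        }
        p = semi + 1;
    }
    return count;
}
```

With the names in hand, calling `glBindAttribLocation(program, i, names[i])` for each `i` and then `glLinkProgram(program)` pins index `i` to the `i`-th declared attribute on every driver, instead of relying on the default assignment. Comparing the names against the mesh's vertex declaration would also catch a shader whose order doesn't match what the engine assumes.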

There's a lot of stuff about OpenGL I don't understand yet… Especially because I feel there's a whole lot of stuff that shouldn't work but works perfectly… So I really need to get some tests going, at least on the 3 main vendors (ATI, nVidia, Intel).

The good part is that GLSL Optimizer works like a charm: it builds a nicely optimized shader, as I wanted. The only inconvenience is that it doesn't preserve line numbers, so when there are problems there's no correspondence between the original shader and the optimized one. For development I built a workaround: it compiles the unoptimized shader first (to catch syntax errors, etc.) and, if that succeeds, builds the optimized one. In the release version I can remove this, of course…

Tomorrow I'll start working on getting OpenGL into Cantrip, to see if I can do it in the next few days… At the time of writing, I'm going to the Netherlands for work next week, so I wanted to have this done by then… At the time of reading, I came back yesterday!

Now listening to “Believe in Nothing” by “Paradise Lost”
